Eye Research demonstrated that a shared AI canvas can convincingly imitate legitimate business content, such as an HR survey or internal login page. When these canvases are distributed via email or messaging platforms, they appear trustworthy because we associate them with well-known AI service domains. And trust is the currency of modern attacks. Read more in the full technical writeup.

How AI canvases are creating a new, overlooked cyber risk for businesses

Teams use AI platforms such as ChatGPT, Google Gemini, and Claude.ai to draft content, build prototypes, analyse data, and collaborate. A newer capability, AI canvases, allows users to create and publicly share HTML-based or application-like content through a simple link.

From a productivity and innovation perspective, this is a significant step forward. From a cybersecurity perspective, it introduces a new and largely unexamined risk surface that many organisations have not yet factored into their security strategy.

Eye Security’s research team recently explored whether shared AI canvases could be misused by threat actors. This was driven by a familiar pattern in cybersecurity: new collaboration features are often adopted faster than governance, security controls, and user awareness can keep up.

What we identified is not a reason for panic. It is, however, a timely signal for executive and IT leadership to understand how AI-enabled collaboration tools may be quietly expanding the attack surface.

AI canvases as dual-use tools: a new delivery channel for familiar cyber attacks

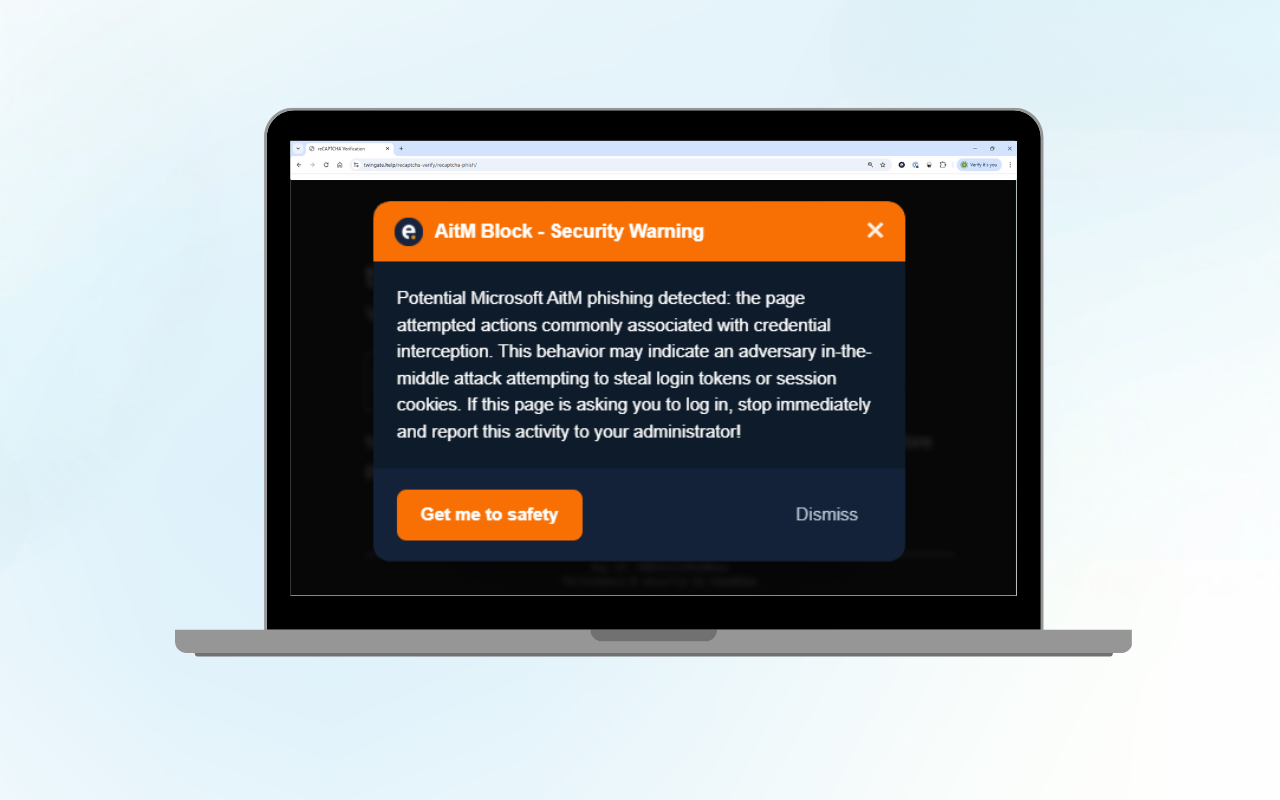

Social engineering remains one of the most effective ways to compromise organisations. Industry reports consistently show that phishing, credential theft, and user-assisted malware execution play a role in the majority of successful cyber incidents. CrowdStrike’s Global Threat Report 2025, for example, continues to highlight techniques such as FakeCaptcha and ClickFix as prevalent methods for initial access. Eye Security's trend report, The State of Cyber Incidents 2026, highlights that 70% of investigated incidents were Business Email Compromise (BEC), an attack known for its heavy reliance on identities and trust.

Eye Research demonstrated that a shared AI canvas can convincingly imitate legitimate business content, such as an HR survey or internal login page. When distributed via email or messaging platforms, these links appear trustworthy because they originate from widely recognised AI services.

This matters because trust is the currency of modern attacks. Users are far more cautious with unknown domains than with links associated with well-known technology providers.

What Eye Research uncovered about the world's top AI platforms

As part of this research, Eye Security examined several widely used AI platforms that support public sharing of content. The findings below reflect a snapshot in time and are intended to highlight design differences rather than assign blame.

ChatGPT: subtle, easy to overlook warnings

Supports publicly shareable canvases that can host interactive HTML content. User-generated content is indicated, but warnings are subtle and easy to overlook. Certain user actions can trigger permission prompts, which rely heavily on user judgement.

Google Gemini: clear warnings

Generates high-quality interactive content and supports public sharing. Shared canvases display a clear warning that the content was created by another user, which may reduce the likelihood of misuse through social engineering.

Claude.ai: tight safeguards with preventative controls

Uses an Artifacts feature that allows code execution within the platform, but with tighter safeguards. Direct manual code editing is restricted, and automated scanning can block clearly malicious modifications. This approach demonstrates stronger preventative controls by default.

Other AI platforms

Several platforms support link sharing of generated content, even if they do not offer full canvas functionality. Any service that allows public sharing of interactive or scriptable content should be considered part of the evolving attack surface.

In sum, the research shows that risk is not limited to a single vendor or feature. It is inherent to any platform that combines public sharing, trusted domains, and executable or interactive content.

Cybersecurity recommendations: what leadership teams can do right now to stay ready

Addressing AI risk does not require radical changes. It requires ownership, clarity, and access to the right expertise.

Include AI tools in your security governance

AI platforms used for business purposes should fall within the scope of risk assessments, acceptable use policies, and internal security guidelines. This includes understanding which tools allow public sharing and how links are exchanged internally.
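As a starting point for understanding how share links circulate internally, a security team could scan message or email text for links that match known public-share patterns. The following is a minimal sketch, not a production control. The host `canvas.ai-vendor.example`, its path prefixes, and the function name `find_ai_share_links` are hypothetical placeholders; the real patterns for each platform in use would need to be taken from the vendor's own documentation.

```python
import re
from urllib.parse import urlparse

# ASSUMPTION: hypothetical host and path prefixes for public share links.
# Replace with the verified patterns of the AI platforms your organisation uses.
SHARE_LINK_PATTERNS = {
    "canvas.ai-vendor.example": ("/share/", "/public/"),
}

# Simple URL matcher: stops at whitespace, angle brackets, or double quotes.
URL_RE = re.compile(r'https?://[^\s<>"]+')


def find_ai_share_links(text, patterns=SHARE_LINK_PATTERNS):
    """Return URLs in `text` whose host and path match a share-link pattern."""
    hits = []
    for raw in URL_RE.findall(text):
        url = raw.rstrip(".,;:!?)")  # drop trailing sentence punctuation
        parsed = urlparse(url)
        host = (parsed.hostname or "").lower()
        prefixes = patterns.get(host, ())
        if any(parsed.path.startswith(prefix) for prefix in prefixes):
            hits.append(url)
    return hits


message = (
    "Please complete the HR survey at "
    "https://canvas.ai-vendor.example/share/abc123, thanks. "
    "Policy page: https://intranet.example/hr"
)
flagged = find_ai_share_links(message)
```

A match here is not evidence of malicious intent. It only shows where publicly shared AI content is entering internal communication, which can inform acceptable use policies and awareness training.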

Modernise security awareness training

Employees should be trained to recognise contemporary phishing techniques, including FakeCaptcha and ClickFix-style attacks delivered through trusted platforms. Regular, realistic simulations remain one of the most effective controls.

Ensure strong endpoint detection and response

When social engineering succeeds, endpoints are where malicious activity becomes visible. A well-configured EDR solution, monitored continuously, is essential to detect suspicious behaviour even when the initial interaction looks legitimate.

Investigate alerts consistently and thoroughly

Detection only creates value when alerts are reviewed by experienced security professionals. Prompt investigation reduces dwell time, limits impact, and prevents repeat incidents.

Maintain centralised visibility through a SIEM

A properly tuned SIEM helps correlate endpoint, browser, and cloud activity. This context is particularly important when new delivery channels emerge.
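To illustrate the kind of correlation meant here, the sketch below pairs a visit to a shared-canvas link with a script interpreter starting on the same endpoint shortly afterwards, a pattern that could indicate a ClickFix-style interaction. It is a simplified illustration under stated assumptions, not a description of any specific SIEM product. The `Event` structure, the host `canvas.ai-vendor.example`, the process list, and the five-minute window are all hypothetical choices.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from urllib.parse import urlparse


@dataclass(frozen=True)
class Event:
    host: str        # endpoint name
    time: datetime   # when the event occurred
    kind: str        # "url_visit" or "process_start"
    detail: str      # visited URL or started process name


# ASSUMPTIONS: hypothetical share host and an illustrative process list.
SHARE_HOSTS = {"canvas.ai-vendor.example"}
SUSPICIOUS_PROCESSES = {"powershell.exe", "mshta.exe", "cmd.exe"}


def correlate(events, window=timedelta(minutes=5)):
    """Pair share-link visits with suspicious process starts on the same
    endpoint that occur within `window` after the visit."""
    visits = [
        e for e in events
        if e.kind == "url_visit"
        and (urlparse(e.detail).hostname or "").lower() in SHARE_HOSTS
    ]
    starts = [
        e for e in events
        if e.kind == "process_start"
        and e.detail.lower() in SUSPICIOUS_PROCESSES
    ]
    findings = []
    for visit in visits:
        for start in starts:
            delay = start.time - visit.time
            if start.host == visit.host and timedelta(0) <= delay <= window:
                findings.append((visit, start))
    return findings


t0 = datetime(2025, 1, 1, 9, 0)
events = [
    Event("pc-1", t0, "url_visit", "https://canvas.ai-vendor.example/share/x"),
    Event("pc-1", t0 + timedelta(minutes=2), "process_start", "powershell.exe"),
    Event("pc-2", t0 + timedelta(minutes=1), "process_start", "powershell.exe"),
    Event("pc-1", t0 + timedelta(minutes=10), "process_start", "cmd.exe"),
]
findings = correlate(events)
```

Neither event is alarming on its own, since administrators start these processes legitimately every day. The value lies in the combination, which is why browser and endpoint telemetry need to arrive in the same place.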

Prepare for response and recovery

Resilience depends not only on prevention, but also on the ability to respond and recover quickly. Organisations should have confidence in both their technical response capabilities and their financial readiness.

The bigger picture: securing innovation without slowing it down

AI-driven productivity tools are here to stay. They will continue to evolve, and they will continue to reshape work. The organisations that benefit most from this shift will be those that combine innovation with disciplined risk management.

At Eye Security, we see this as a familiar challenge. New technology expands opportunity and complexity at the same time. Our role is to help organisations navigate that complexity with calm, expert guidance, continuous monitoring, and integrated protection that spans both technical and financial risk.

By pairing 24/7 expert-led threat detection and response with security-informed cyber insurance, we help leadership teams maintain confidence even as the attack surface changes.

Editor's note: AI canvases are not inherently unsafe, and there is currently no evidence of large-scale exploitation through these features. The scenarios explored by Eye Security are hypothetical and executed in a controlled research environment. However, cyber risk tends to emerge incrementally as new platform capabilities are combined with well-established attacker techniques. From this perspective, we take the example of AI canvases as an early warning to illustrate how trusted tools can be misused if security design, user awareness, and monitoring do not evolve in parallel with innovation.

Frequently Asked Questions (FAQ): AI Canvases and Cyber Risk

What are AI canvases?

AI canvases are interactive workspaces within AI platforms such as ChatGPT, Google Gemini, and Claude.ai. They allow users to create and share HTML-based or application-like content through a simple link. Common use cases include prototypes, surveys, forms, dashboards, or collaborative documents.

Why are AI canvases relevant from a cybersecurity perspective?

AI canvases introduce a new, often overlooked attack surface. Because they can host interactive content and are shared via trusted AI platform domains, they may be perceived as inherently safe by users. This trust can be misused to deliver familiar social engineering attacks through a new channel.

Are AI canvases inherently unsafe?

No. AI canvases are not inherently unsafe, and there is currently no evidence of large-scale exploitation of these features in the wild. Eye Security’s research explored hypothetical scenarios in a controlled environment to understand how existing attack techniques could potentially be adapted to new platform capabilities.

What types of attacks could be delivered through AI canvases?

AI canvases do not enable new attack techniques. Instead, they can be used as a delivery mechanism for well-known methods such as phishing, FakeCaptcha, ClickFix, or credential harvesting. These attacks rely heavily on user trust rather than technical vulnerabilities.

Which AI platforms were examined and what differences did Eye Research observe between platforms?

Eye Security reviewed several widely used platforms that allow public sharing of content, including ChatGPT, Google Gemini, and Claude.ai. The goal was to understand differences in design choices, safeguards, and user warnings, not to single out individual vendors.

The research found meaningful variations. ChatGPT supports shared canvases with interactive content, but user warnings are subtle and may be overlooked. Google Gemini displays clear warnings when content was created by another user, which can reduce the effectiveness of social engineering. Claude.ai applies tighter preventative controls through its Artifacts feature, including restricted code editing and automated scanning. These differences highlight that risk is influenced not only by functionality but also by platform design.

Is this risk limited to specific AI platform vendors?

No. The underlying risk applies to any platform that combines public content sharing, trusted domains, and interactive or executable elements. As AI-powered collaboration tools continue to evolve, similar considerations will apply across the ecosystem.