The malicious use of AI is evolving, making attacker tactics increasingly sophisticated. Social engineering, the most common form of cyberattack, is a prime example. The effective use of deepfakes and LLMs to craft personalised vishing calls and phishing messages shows how generative AI increases the sophistication, scale, and therefore the success of social engineering campaigns. This article explores the use of AI as an attack tool, examines where malicious and “normal” uses overlap, and offers recommendations for the safe use of AI-powered tools.

Introduction: LLMs for speed and scale

AI-powered applications are profoundly changing attack vectors such as social engineering and malware. Large Language Models (LLMs) lower the barrier to entry and allow attacks to be executed more quickly and at greater scale. LLM-based agents can already automate significant portions of a cyberattack, and faster data analysis combined with automated code generation makes attacks feasible at a scale that was previously impractical.

Open-source initiatives further enable malicious actors to access and exploit freely available technologies. Many of these are dual-use tools: the same technology serves legitimate and criminal purposes alike. In this article, we look at where AI as an attack tool intersects with its everyday applications, and offer recommendations for using AI tools safely.

AI as an attack tool: the impact of LLMs

The use of LLMs in cybersecurity depends largely on the intent of the actor. Many scenarios fall into a dual-use category: while these tools have legitimate applications, they can also be easily repurposed for malicious activity, providing a powerful foundation for successful social-engineering campaigns.

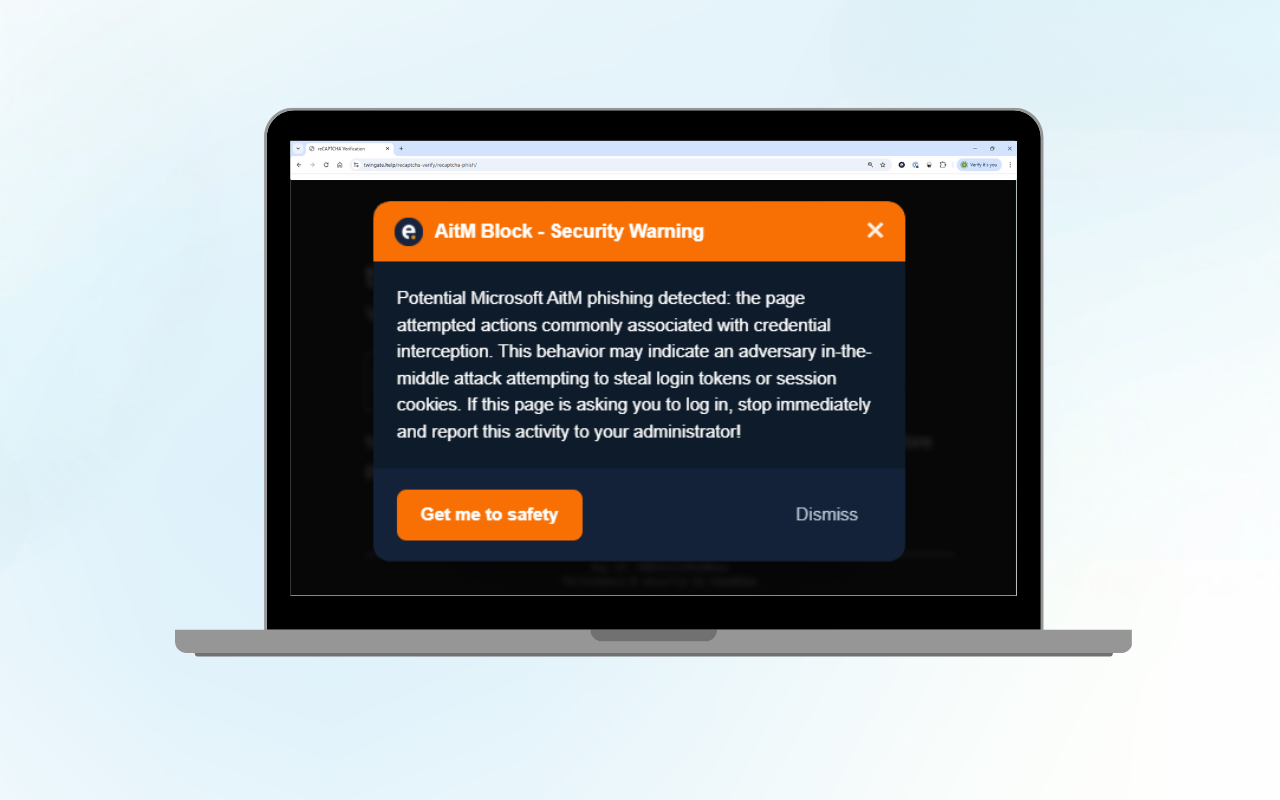

This is largely due to the widespread availability of high-quality LLMs, which enables actors with moderate technical skills or limited language proficiency to craft highly convincing phishing messages. AI allows attackers to generate personalised content, mimic specific writing styles, and even embed contextually relevant details, making phishing attempts increasingly difficult to detect. The rise of deepfakes (AI-generated images, video, and audio that appear authentic) further amplifies the threat.

LLMs also facilitate the creation of malicious code, allowing less experienced threat actors to develop and distribute malware more quickly and efficiently. Seasoned attackers, meanwhile, can scale operations to inflict greater damage. Most open-source LLMs incorporate only basic safeguards against abuse, which are often trivial to bypass.

Beyond content creation, LLMs can even automate components of ransomware campaigns, including acquiring cryptocurrency and facilitating ransom payments. Because many victims are unfamiliar with the process of paying a ransom, cybercriminals offer multilingual “support” services to guide them through the transaction.

AI-powered cyberattacks: when AI becomes a weapon

Many cybersecurity tools rely on automated, AI-supported vulnerability detection, but this also introduces new risks. Numerous open-source tools have been developed that are used not only by organisations but also by cybercriminals. Open model-sharing platforms are often leveraged to distribute ML models infected with malware. Because some model file formats can execute embedded code when a model is loaded, malicious code hidden in trained models or data significantly increases the risk of it being deployed undetected.

Reinforcement learning systems are also becoming tools for malicious activity. Because these systems interact with their environment, adapt, and learn, they can be used to execute long-term attack strategies. Their potential applications are broad:

- Some AI tools simulate target networks to plan attack vectors and identify optimal data exfiltration paths.

- Others are trained on a specific target network, although these trained agents are difficult to transfer to other environments.

Several tools now support penetration testing with AI assistants. While valuable for defenders, such tools can also lower the entry barrier for attackers by automating parts of the intrusion process. AI is increasingly used to partially automate the attack chain, though so far there is no evidence of fully autonomous AI-based cyberattacks being used in practice.

AI-generated malware: from theory to possibility

Malware, such as ransomware, trojans, or worms, is typically deployed on target endpoints through exploits or social engineering to cause damage. With the rise of AI-driven attack tools, classic defences like antivirus software are no longer sufficient. AI models, ranging from LLMs to GANs (Generative Adversarial Networks) and reinforcement learning systems, are being applied to cyberattacks, even by less technically skilled actors.

- Malware creation via natural language: Basic malicious code can now be generated with simple prompts. However, no AI system currently exists that can independently develop sophisticated malware with advanced obfuscation techniques or zero-day exploits, since the required training data is expensive and hard to access.

- Malware modification: AI can modify existing malware to make it harder to detect, typically by altering the features that detection models analyse rather than the source code. While proof-of-concept tools exist, this remains largely academic and requires significant expertise and resources.

- AI integration into malware: AI could be embedded into malware functionality, for instance through polymorphic engines that dynamically change code to evade detection, or through models trained to mimic user behaviour so that malicious activity blends in with normal patterns. To date, however, there is no evidence of practical use, although such scenarios are frequently discussed.

What is clear is that the development of secure algorithms and defensive protocols is essential to counter these emerging threats before they mature into real-world attack tools.

The future of AI in cybersecurity: from threat amplifier to defence enabler

AI-enabled applications are reshaping the threat landscape, making cyberattacks faster, more scalable, and more sophisticated. To keep pace, companies must not only increase the speed and scope of their defensive efforts but also elevate cybersecurity to a strategic business priority.

Key measures include:

- Building a modern, adaptive IT infrastructure

- Operating a 24/7 Security Operations Center (SOC)

- Training employees to recognise and resist social engineering attempts

- Enforcing multi-factor authentication (MFA)

- Strengthening patch management and vulnerability response

Teaming up with cybersecurity providers

Close collaboration with cybersecurity providers and the use of managed security services can also help strengthen and maintain IT security at a high level.

Secure AI development: the role of developers in cyber defence

Developers of AI systems must embed secure algorithms, protocols, and protective features directly into their tools. To stay ahead, developers should actively monitor the latest attack vectors and threat trends, adapting their systems to new risks to minimise exposure.

Conclusion: incorporating AI in your defence layer

While AI can empower attackers, it also offers powerful advantages for defenders. Organisations are encouraged to adopt AI-driven tools for threat detection, vulnerability management, and automated response to increase both the speed and scope of their defensive activities. On the strategic side, this means building a modern IT infrastructure, operating a 24/7 Security Operations Center (SOC), and training employees to resist social engineering attacks. On the tactical side, it means introducing multi-factor authentication and improving patch management processes.

At Eye Security, we deliver a fully managed service that combines extended detection and response (XDR) and Attack Surface Management (ASM) with threat hunting, backed by a 24/7 SOC and in-house incident response. This comprehensive approach strengthens resilience against today’s and tomorrow’s AI-powered threats.

Dual-use AI Tools in Cybersecurity: Frequently Asked Questions

What makes AI such a powerful tool for cybercriminals?

AI, and particularly large language models (LLMs), lowers the barrier to entry for cyberattacks. It enables attackers to generate convincing phishing messages, automate parts of ransomware campaigns, and scale malicious operations far beyond what was previously possible.

Can large language models (LLMs) really generate malware?

Yes, but with limitations. LLMs can produce basic malicious code snippets, and AI can modify existing malware to evade detection. However, no current AI system can independently create sophisticated malware or zero-day exploits without human expertise and high-quality training data.

How are deepfakes and AI-driven phishing attacks used in practice?

Cybercriminals use AI to generate realistic audio, video, and text content. For example, deepfake voice recordings are used in vishing (voice phishing) to impersonate executives, while AI-generated phishing emails are tailored with personal details that make them harder to spot.

Are fully autonomous AI cyberattacks already happening?

Not yet. While AI can automate parts of the attack chain, such as malware development, phishing distribution, or ransom payment instructions, there is currently no evidence of fully autonomous AI-based attacks being deployed in the wild.

What are “dual-use” AI tools in cybersecurity?

Dual-use refers to AI applications that have both legitimate and malicious uses. For example, penetration testing tools with AI assistance can strengthen defences when used by security teams, but also lower the barrier for attackers if misused.

How can companies defend against AI-powered social engineering?

Defences should combine human awareness with technical safeguards. Critical measures include training employees to recognise manipulation attempts, enforcing multi-factor authentication, operating a 24/7 Security Operations Center (SOC), and adopting AI-driven detection tools.

What role do developers play in preventing malicious AI use?

Developers must integrate security by design. This means embedding secure protocols, testing algorithms against potential abuse, and ensuring regular patching and updates. By anticipating how attackers might misuse their tools, developers reduce enterprise exposure.

How can businesses use AI securely without increasing risk?

AI should be integrated into the defence layer, not just into operations. Companies can safely use AI for threat detection, vulnerability management, and automated response when paired with strong governance, monitoring, and trusted security providers.