To fully unlock the potential of AI systems in cybersecurity, organisations need effective quality management processes. Best-practice guidelines for the responsible use of AI algorithms and of the data used by large language models (LLMs) should be accompanied by privacy-friendly IT system design and robust cybersecurity measures. Given the many unresolved legal questions in this area, organisations should address them in an open and structured manner.

AI systems also introduce a range of legal and regulatory risks. Since not all methods for validating the accuracy and performance of AI systems are reliable, companies must carefully assess the regulatory and operational risks associated with these technologies.

Definitions and foundations: understanding AI and AI systems

What is Artificial Intelligence (AI)?

Artificial Intelligence (AI) refers to the development of computer systems capable of performing tasks and making decisions that typically require human intelligence. These systems can learn from experience, recognise patterns, and solve problems without being explicitly programmed. AI systems encompass both software and hardware that use AI to act “rationally” in physical or digital environments. They are deployed across a wide range of applications, from automating business processes to enhancing cybersecurity. The importance of AI systems continues to grow as they help organisations operate more efficiently and securely.

Machine learning and deep learning: core AI techniques

Machine Learning (ML) is a widely used subfield of AI. ML algorithms enable systems to learn from data and make decisions without being explicitly programmed for each case. By analysing large datasets and identifying patterns, ML can improve system performance over time, enabling smarter, more adaptive AI solutions.

Deep Learning (DL) is a specialised subset of machine learning that uses artificial neural networks inspired by the human brain. These networks can tackle complex tasks such as image recognition, natural language processing, and autonomous decision-making. By applying deep learning, organisations can gain deeper insights from data and develop more advanced, AI-driven solutions.

AI, cybersecurity, and data privacy: a complex intersection

Requirements for data privacy and explainability present a real challenge for many AI users. The growing complexity of machine learning and the increasing use of deep learning algorithms, which often function as black boxes, mean that highly accurate results can be achieved without a clear understanding of how those results were generated.

The benefits, however, remain significant. When applied to IT security, AI enables security teams to efficiently process vast amounts of data, detect and analyse security events of varying complexity, maintain real-time oversight of system status, and respond to cyberattacks.

According to IBM’s Cost of a Data Breach Report 2024, companies that use AI in their cybersecurity operations save an average of $3.05m (approximately €2.83m) and 74 workdays in addressing security incidents.

How AI is applied in cybersecurity

The following provides an overview of the most common applications of AI for protecting critical enterprise systems against cyberattacks:

Intrusion Detection and Prevention Systems (IDPS)

AI can be integrated into Intrusion Detection and Prevention Systems (IDPS) to identify attackers and trigger predefined protective measures, such as blocking IP addresses or isolating compromised systems.

Intrusion Detection Systems (IDS) can autonomously detect attacks or assist security teams in identifying them. Supervised ML algorithms use historical attack data as training input to recognise similar events. However, supervised ML struggles to detect novel attack patterns. Unsupervised anomaly detection, on the other hand, identifies behaviours that deviate significantly from the norm, but it may generate a higher number of false positives.
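To make the unsupervised approach concrete, here is a minimal sketch of anomaly detection using a z-score against a learned baseline. It assumes a single hypothetical numeric feature (requests per minute from one host) and a threshold of three standard deviations; both are illustrative choices, and production IDS models typically use many features and more robust statistics.

```python
from statistics import mean, stdev


def fit_baseline(samples: list[float]) -> tuple[float, float]:
    """Learn the 'norm' from unlabelled traffic: mean and standard deviation."""
    return mean(samples), stdev(samples)


def is_anomalous(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag a value lying more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical feature: requests per minute from one host during normal operation.
normal_traffic = [98, 102, 95, 105, 100, 99, 101, 97, 103, 100]
baseline = fit_baseline(normal_traffic)

print(is_anomalous(180, baseline))  # True: far outside the norm
print(is_anomalous(104, baseline))  # False: within normal variation
```

Lowering `threshold` catches subtler deviations but also flags more normal traffic, which is the false-positive trade-off described above: `is_anomalous(104, baseline, threshold=1.0)` returns `True`.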

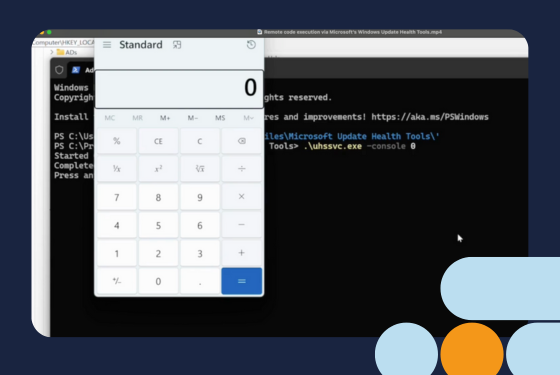

Incident response automation

AI-driven automation can also be applied in threat mitigation, such as Incident Response (IR) automation. AI systems detect threats and respond according to predefined playbooks, helping minimise risk and accelerate reaction times. Typical IR automation steps include attack detection, isolation of compromised systems, blocking malicious activity, and recovery via backups. Regular system reviews additionally ensure AI-driven IR remains efficient and secure.
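The playbook idea can be sketched as an ordered list of response steps selected by incident severity. This is a simplified illustration: the step functions, severity levels, and host names are hypothetical, and each step only records what it would do, where a real deployment would call EDR, firewall, and backup tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Incident:
    host: str
    source_ip: str
    severity: str  # "low", "medium" or "high", assumed to be set by the detection step
    actions: list[str] = field(default_factory=list)


# Hypothetical response steps: they only log the action instead of executing it.
def isolate_host(incident: Incident) -> None:
    incident.actions.append(f"isolate {incident.host}")


def block_ip(incident: Incident) -> None:
    incident.actions.append(f"block {incident.source_ip}")


def restore_from_backup(incident: Incident) -> None:
    incident.actions.append(f"restore {incident.host} from backup")


def notify_analyst(incident: Incident) -> None:
    incident.actions.append("notify analyst")


# Predefined playbooks: which steps run, and in which order, per severity level.
PLAYBOOKS = {
    "high": [isolate_host, block_ip, restore_from_backup, notify_analyst],
    "medium": [block_ip, notify_analyst],
    "low": [notify_analyst],
}


def run_playbook(incident: Incident) -> list[str]:
    for step in PLAYBOOKS[incident.severity]:
        step(incident)
    return incident.actions


incident = Incident(host="ws-042", source_ip="203.0.113.7", severity="high")
print(run_playbook(incident))
```

Keeping a human notification step at the end of every playbook is one way to support the regular reviews mentioned above, since each automated action leaves a record an analyst can check.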

Security Information and Event Management (SIEM)

SIEM solutions aggregate comprehensive system data to detect and respond to incidents in real time. AI enhances SIEM capabilities by analysing both structured data, such as logs, and unstructured data, including app crash reports, employee-submitted alerts, or AI-generated visualisations of threats.
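AI-based analysis in a SIEM usually sits on top of correlation over parsed, structured events. The sketch below shows one such correlation in plain Python: it flags source IPs with at least five failed logins within any one-minute window. The event fields, threshold, and window are assumptions for illustration and do not reflect any particular SIEM's rule syntax.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def brute_force_suspects(events, threshold=5, window=timedelta(minutes=1)):
    """Return source IPs with at least `threshold` failed logins inside any `window`."""
    failures = defaultdict(list)
    for event in events:
        if event["event"] == "login_failed":
            failures[event["src_ip"]].append(event["time"])

    suspects = set()
    for ip, times in failures.items():
        times.sort()
        start = 0
        # Slide a window over the sorted timestamps and count failures inside it.
        for end in range(len(times)):
            while times[end] - times[start] > window:
                start += 1
            if end - start + 1 >= threshold:
                suspects.add(ip)
                break
    return suspects


# Hypothetical parsed log events (structured data, as a SIEM would hold them).
base = datetime(2024, 5, 1, 10, 0, 0)
events = (
    # Six failures within 50 seconds from one address: looks like brute forcing.
    [{"time": base + timedelta(seconds=10 * i), "event": "login_failed", "src_ip": "198.51.100.23"}
     for i in range(6)]
    # Six failures spread over 25 minutes from another address: likely ordinary typos.
    + [{"time": base + timedelta(minutes=5 * i), "event": "login_failed", "src_ip": "192.0.2.10"}
       for i in range(6)]
    + [{"time": base, "event": "login_ok", "src_ip": "192.0.2.10"}]
)

print(brute_force_suspects(events))  # {'198.51.100.23'}
```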

Predictive threat analytics

AI can also predict future threats by analysing datasets and identifying patterns. This goes beyond analysing historical cyberattacks: AI systems draw on threat intelligence gathered from security logs, the dark web, and threat databases, enabling organisations to anticipate attacks and adapt their defences proactively.
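One basic building block of threat-intelligence work is joining external indicators of compromise (IOCs) with an organisation's own telemetry. The sketch below assumes a hypothetical feed that maps known-bad IPs and domains to the campaign they were attributed to, and a hypothetical list of outbound connections from firewall and DNS logs; real feeds and log formats will differ.

```python
# Hypothetical threat-intelligence feed: indicator -> attributed campaign.
ioc_feed = {
    "203.0.113.50": "campaign-A",
    "evil-update.example": "campaign-A",
    "198.51.100.99": "campaign-B",
}

# Hypothetical outbound connections taken from firewall and DNS logs.
observed = [
    {"host": "ws-001", "dest": "intranet.example"},
    {"host": "ws-017", "dest": "evil-update.example"},
    {"host": "srv-db", "dest": "198.51.100.99"},
]


def match_iocs(connections, feed):
    """Return (host, indicator, campaign) for each connection to a known-bad destination."""
    return [
        (conn["host"], conn["dest"], feed[conn["dest"]])
        for conn in connections
        if conn["dest"] in feed
    ]


for host, indicator, campaign in match_iocs(observed, ioc_feed):
    print(f"{host} contacted {indicator} ({campaign})")
```

Knowing which campaign an indicator belongs to lets defenders look ahead to that campaign's other known infrastructure and techniques, which is where the predictive element comes in.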

Incidents can be detected by analysing data from ongoing attack campaigns or by monitoring forums. Natural Language Processing (NLP) is often used to assess potential communication between threat actors and identify risks.
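As a deliberately simple stand-in for an NLP model, the sketch below scores forum posts with a weighted keyword list and doubles the score when the organisation is named. The terms, weights, and the organisation name `examplecorp` are hypothetical; a production system would use a trained language model rather than keyword matching.

```python
import re

# Hypothetical weighted terms; a real system would use a trained NLP classifier.
RISK_TERMS = {"exploit": 3, "0day": 3, "dump": 2, "credentials": 2, "vpn": 1, "selling": 1}
ORG_NAMES = {"examplecorp"}


def risk_score(post: str) -> int:
    """Sum term weights in a post; double the score if the organisation is mentioned."""
    tokens = re.findall(r"[a-z0-9]+", post.lower())
    score = sum(RISK_TERMS.get(token, 0) for token in tokens)
    if ORG_NAMES & set(tokens):
        score *= 2
    return score


posts = [
    "Selling VPN credentials for ExampleCorp, fresh dump",
    "Anyone know a good recipe for sourdough?",
]
for post in posts:
    print(risk_score(post), post)
```

Keyword lists miss paraphrases and slang, which is exactly the gap NLP models are meant to close; the sketch only shows where such a model plugs into the triage flow.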

The imperative of AI and automation in cybersecurity: Without AI and automation, managing modern cyber risks is nearly impossible. At the same time, using AI in cybersecurity requires a robust quality management strategy to ensure the technology is effective, safe, and aligned with organisational security goals.

What are the risks of AI in cybersecurity?

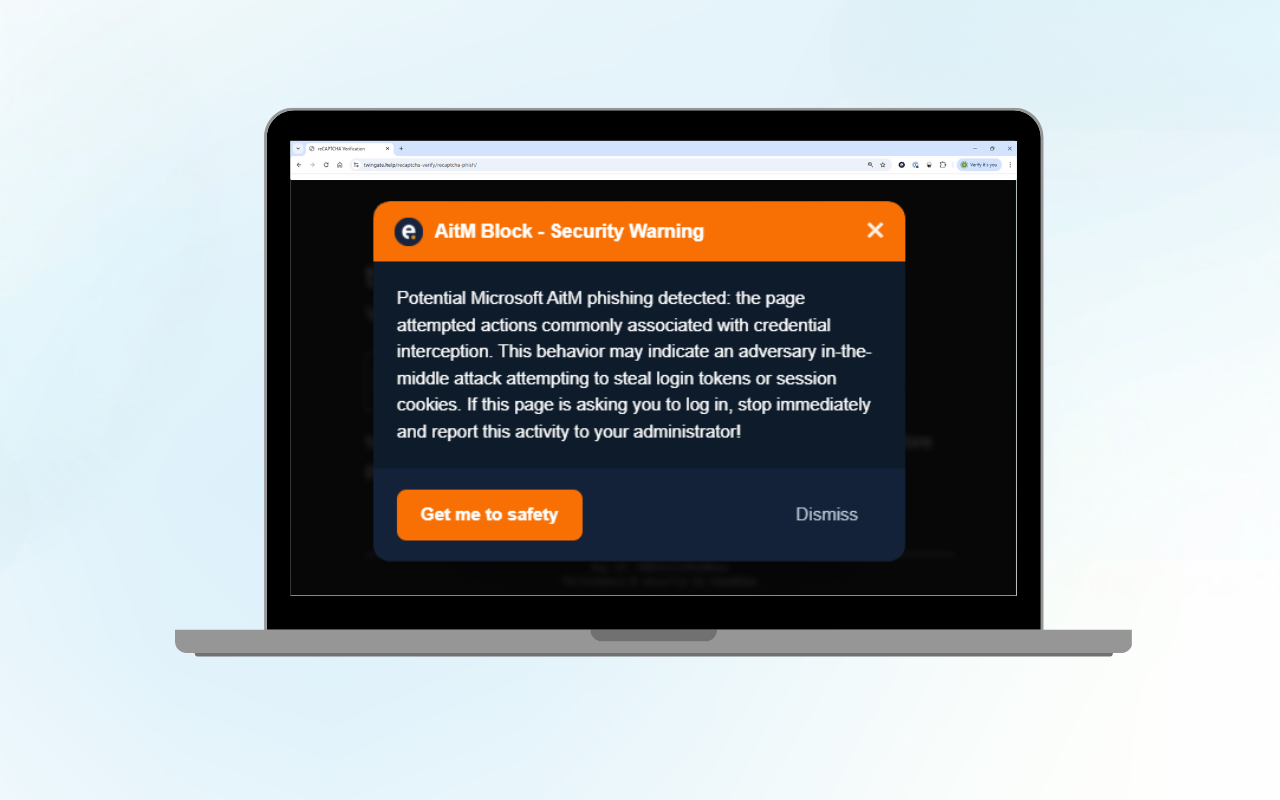

AI systems can also be manipulated by attackers to produce false or misleading results. This can create a range of issues, including violations of data protection laws and compromised security of systems and applications. For example, attackers could alter AI models so that they fail to detect genuine threats or mistakenly classify harmless activities as dangerous.
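One way to manipulate a model is data poisoning, that is, tampering with its training data. The toy example below is an assumption-laden sketch rather than a real detector: it learns a threshold halfway between the mean "benign" and "malicious" values of a single hypothetical feature, then shows how a few mislabelled training samples shift that threshold so a genuine attack slips through.

```python
from statistics import mean


def train_threshold(benign: list[float], malicious: list[float]) -> float:
    """Toy detector: place the decision threshold halfway between the class means."""
    return (mean(benign) + mean(malicious)) / 2


def classify(score: float, threshold: float) -> str:
    return "malicious" if score > threshold else "benign"


# Hypothetical feature: outbound data volume (MB) per session.
benign = [10, 12, 9, 11, 8]
malicious = [90, 85, 95, 100, 80]
clean_threshold = train_threshold(benign, malicious)

# Poisoning: the attacker slips large sessions labelled "benign" into the training data.
poisoned_benign = benign + [120, 130, 125]
poisoned_threshold = train_threshold(poisoned_benign, malicious)

attack = 65  # a genuine exfiltration attempt
print(clean_threshold, classify(attack, clean_threshold))
print(poisoned_threshold, classify(attack, poisoned_threshold))
```

Checking where training data comes from and monitoring how decision thresholds drift between retraining runs are ways to notice this kind of shift during the regular reviews recommended below.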

Regular reviews and updates of AI systems help ensure they remain secure and reliable. Additionally, organisations should educate employees about the risks associated with AI systems and encourage caution when interacting with these technologies. Providing training and clear guidelines can promote the safe and responsible use of AI tools.

In summary, by combining technical safeguards with employee education, companies can minimise AI-related risks and strengthen their overall security posture.

What are the recommended AI quality management criteria and measures?

The following outlines key criteria and implementation measures first presented in the Fraunhofer Institute for Secure Information Technology’s whitepaper “Eberbacher Gespräche über KI, Sicherheit und Privatheit”. These recommendations focus on ensuring responsible, secure, and legally compliant AI use within corporate cybersecurity frameworks.

Minimum quality standards for AI in cybersecurity

Machine Learning (ML) systems often perform differently in controlled lab environments than in real-world operational settings, and it can be difficult to determine the exact basis on which results are generated. Companies should therefore assess AI systems using metrics such as the False Acceptance Rate (FAR) and the False Positive Rate (FPR), and require transparency regarding the training data used.
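Both metrics can be computed from labelled evaluation data. The sketch below assumes the biometrics-style reading of FAR, namely the share of malicious events the system accepts as benign, and the usual FPR, the share of benign events it flags. The two prediction lists are hypothetical and stand in for the same detector evaluated on a lab test set and on a labelled production sample; for brevity both use the same ground truth.

```python
def error_rates(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """FAR and FPR for a detector; labels are 1 = malicious, 0 = benign."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # attacks accepted as benign
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # benign events flagged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "FAR": fn / (fn + tp) if fn + tp else float("nan"),
        "FPR": fp / (fp + tn) if fp + tn else float("nan"),
    }


# Hypothetical labels and predictions (lab test set vs. production sample).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
lab_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
field_pred = [1, 1, 0, 0, 1, 1, 0, 0, 0, 0]

print("lab:  ", error_rates(y_true, lab_pred))
print("field:", error_rates(y_true, field_pred))
```

Here the field FAR (0.5) is double the lab FAR (0.25), the kind of gap between lab and real-world performance that makes ongoing measurement in production worthwhile.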

Key takeaway: Decisions made by AI systems must be verifiable, and outputs should be reproducible to ensure reliable cybersecurity performance.

Legally compliant data anonymisation in AI systems

Once raw data has been fed into an AI model, removing it is typically impractical without retraining the model, which is costly and time-consuming. Organisations should therefore implement privacy-preserving mechanisms that protect personal data before it is processed.

While Big Data and AI can enhance business insights, they pose challenges to data privacy. Analyses can create links between data points, undermining intended anonymity. In some cases, neural network training sets may allow reconstruction of original data, potentially revealing personal information.
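The linkage risk described above can be measured with k-anonymity: group records by their quasi-identifiers (attributes that are harmless alone but identifying in combination) and take the smallest group size. A value of k = 1 means at least one record is unique and could be linked back to a person. The records and attribute names below are hypothetical.

```python
from collections import Counter


def k_anonymity(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Smallest group size when records are grouped by their quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


# Hypothetical "anonymised" log extract: names removed, other attributes kept.
records = [
    {"dept": "Finance", "site": "Berlin", "role": "Analyst", "alerts": 3},
    {"dept": "Finance", "site": "Berlin", "role": "Analyst", "alerts": 1},
    {"dept": "IT", "site": "Munich", "role": "Admin", "alerts": 7},
    {"dept": "IT", "site": "Munich", "role": "Admin", "alerts": 0},
    {"dept": "Legal", "site": "Berlin", "role": "Head", "alerts": 5},
]

print(k_anonymity(records, ["dept", "site", "role"]))  # 1: the Legal head is unique
print(k_anonymity(records, ["site"]))                  # 2: coarser attributes, larger groups
```

Generalising or dropping quasi-identifiers raises k, at the cost of detail available to the AI model.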

Best practice: Develop verifiable protocols for pseudonymisation or anonymisation of personal data before it is used in AI processing, ensuring compliance with privacy regulations.
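A common pseudonymisation technique is to replace identifiers with a keyed hash, so events from the same user or IP can still be correlated without exposing the raw value. The sketch below uses HMAC-SHA256 from Python's standard library; the key, the event fields, and the truncation to 16 hex characters are assumptions for illustration, and truncation slightly increases the chance of two identifiers sharing a pseudonym.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, key: bytes) -> str:
    """Replace an identifier with a truncated keyed hash (HMAC-SHA256).

    The same input always maps to the same pseudonym, so events remain linkable,
    but without the key the mapping cannot be recomputed.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


# Hypothetical key; in practice it would live in a secrets manager, separate from the AI pipeline.
KEY = b"replace-with-a-random-secret"

event = {"user": "alice@example.com", "src_ip": "192.0.2.44", "action": "login_failed"}
safe_event = {
    **event,
    "user": pseudonymise(event["user"], KEY),
    "src_ip": pseudonymise(event["src_ip"], KEY),
}
print(safe_event)
```

Keyed pseudonyms like these remain personal data under the GDPR, because whoever holds the key can re-link them; anonymisation requires that this link can no longer be made.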

Clear liability frameworks

Unclear liability rules can deter companies from fully leveraging AI for cybersecurity. Without a clear understanding of legal risk, organisations may avoid otherwise viable AI applications.

Alongside the EU AI Act, the European Commission proposed an AI Liability Directive, aiming to create a harmonised legal framework and address gaps in accountability for AI-driven outcomes.

Establishing a code of conduct for safe AI use

Several frameworks exist to guide ethical and safe AI deployment, such as the Hambach Declaration and recommendations from Germany’s Federal Data Ethics Commission. A corporate Code of Conduct for AI should be:

- Verifiable and auditable: Data journeys, from collection to storage and processing, must be traceable and validated.

- Ethically grounded: Ensuring responsible use while minimising risk to individuals and systems.
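Traceable data journeys can be supported by an append-only lineage log in which each entry's hash also covers the previous entry's hash, so any later edit breaks the chain. This is a minimal sketch of that idea; the stage names, dataset labels, and entry fields are hypothetical, and a production system would also need secure storage and access control for the log itself.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list[dict], stage: str, dataset: str, detail: str) -> None:
    """Append a lineage entry whose hash covers the previous entry's hash."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "stage": stage,  # e.g. collection, storage, processing
        "dataset": dataset,
        "detail": detail,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry["hash"] = _digest(entry)
    log.append(entry)


def verify(log: list[dict]) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


lineage: list[dict] = []
append_entry(lineage, "collection", "fw-logs-2024-05", "exported from firewall cluster")
append_entry(lineage, "storage", "fw-logs-2024-05", "pseudonymised, stored in data lake")
append_entry(lineage, "processing", "fw-logs-2024-05", "used to retrain anomaly model")
print(verify(lineage))  # True

lineage[1]["detail"] = "raw data stored"  # simulated tampering
print(verify(lineage))  # False
```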

Implementing AI risk management measures

Additional challenges stem from the diverse range of threat actors who increasingly employ AI-driven methods as offensive tools. These actors may include financially motivated cybercriminals as well as state-sponsored groups targeting critical infrastructure. In this regard, comprehensive risk management strategies should encompass the prioritisation of threat actors and the establishment of tiered priority levels for potential target locations.

Key measures include:

- Threat prioritisation: Identifying high-risk attackers and vulnerable assets.

- Risk stratification: Establishing priority levels for systems and locations based on potential impact.

- Recommended framework: The AI TRiSM (Trust, Risk, and Security Management) Framework by Gartner provides a structured approach to continuous AI oversight, including (1) anomaly detection for AI-generated content, (2) data governance and protection, and (3) reduction of application security risks.
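Threat prioritisation and risk stratification can be sketched with a simple risk matrix: rate each asset's likelihood of being targeted and the impact of a compromise, multiply, and map the score to priority tiers. The 1 to 5 scales, the tier thresholds, and the asset names below are assumptions for illustration; the likelihood × impact convention is a common risk-matrix approach, not one prescribed by the frameworks named above.

```python
def tier(score: int) -> str:
    """Map a likelihood x impact score (1-25) to a priority tier (thresholds assumed)."""
    if score >= 15:
        return "Tier 1 (critical)"
    if score >= 8:
        return "Tier 2 (high)"
    return "Tier 3 (standard)"


def prioritise(assets: dict[str, dict[str, int]]) -> list[tuple[str, int, str]]:
    """Rank assets by likelihood x impact, highest risk first."""
    scored = [(name, a["likelihood"] * a["impact"]) for name, a in assets.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(name, score, tier(score)) for name, score in scored]


# Hypothetical assets rated on 1-5 scales: likelihood reflects how capable and
# motivated the relevant threat actors are; impact reflects business criticality.
assets = {
    "SCADA gateway": {"likelihood": 4, "impact": 5},
    "Customer portal": {"likelihood": 4, "impact": 3},
    "HR file share": {"likelihood": 2, "impact": 3},
    "Test VM": {"likelihood": 3, "impact": 1},
}

for name, score, level in prioritise(assets):
    print(f"{name:<16} {score:>2}  {level}")
```

A state-sponsored group targeting critical infrastructure would raise the likelihood rating of assets such as the SCADA gateway, moving them up the ranking without changing the scoring logic.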

Gartner predicts that organisations applying AI TRiSM controls will reduce inaccurate or unauthorised outputs by at least 50% by 2026, minimising errors that could lead to faulty decisions.

Conclusion and outlook

Organisations are increasingly using AI-driven systems to enhance their security posture, enabling faster threat detection, more effective attack mitigation, and improved forecasting of emerging risks. At the same time, the adoption of such technologies introduces new challenges that must be addressed with equal rigour, including limited model transparency, data protection concerns, and the absence of clearly defined liability frameworks.

Against this backdrop, organisations are encouraged to establish quality standards for AI systems and implement privacy-friendly mechanisms, such as data anonymisation, from the outset. With a well-structured approach to quality and risk management, AI can not only reduce security risks but also serve as a strategic tool for maintaining a competitive advantage.

AI in Enterprise Cybersecurity: Frequently Asked Questions

What is the role of AI in cybersecurity?

AI is used to detect threats faster, prevent attacks, and predict future risks. Machine learning (ML) and deep learning (DL) help organisations identify patterns, automate threat detection, and respond to incidents in real time. AI transforms cybersecurity from a reactive practice into a proactive strategic tool.

Which AI applications are most common in enterprise cybersecurity?

- Intrusion Detection and Prevention Systems (IDPS): AI can detect attackers and trigger automated responses, such as IP blocking or system isolation.

- Incident response automation: AI systems execute predefined playbooks, isolate compromised systems, and accelerate recovery.

- Security Information and Event Management (SIEM): AI aggregates structured and unstructured data to detect incidents in real time.

- Predictive threat intelligence: AI analyses historical and external data, including the dark web and threat databases, to forecast future attacks.

How does AI amplify social engineering attacks?

AI, including LLMs and deepfake tools, allows attackers to generate highly personalised phishing, vishing, and other social engineering campaigns. It can mimic writing styles, create contextually relevant content, and scale attacks rapidly, making them harder to detect.

Can AI be used to create malware?

Yes. AI can assist in:

- Malware generation using natural language: Basic malicious code can be created from prompts.

- Malware modification: AI can make existing malware harder to detect through feature manipulation.

- AI integration into malware: Polymorphic engines or behaviour-mimicking AI could theoretically make attacks stealthier, though no practical applications have been widely observed yet.

What risks do AI systems pose in cybersecurity?

- Manipulation by attackers: AI models can be altered to misclassify threats or over-flag harmless activity.

- Data privacy concerns: AI training datasets can unintentionally expose personal information if not properly anonymised.

- Liability and regulatory uncertainty: Companies need clear guidelines on AI use to avoid legal risks.

What measures can companies take for AI quality management?

- Minimum quality standards: Monitor false positive rates (FPR) and false acceptance rates (FAR), and ensure transparency of training data.

- Data anonymisation: Implement privacy-preserving techniques before feeding data into AI models.

- Liability clarity: Align with AI Act guidelines and national regulations to reduce legal exposure.

- Code of Conduct: Establish internal policies for secure AI usage, ensuring traceability of data and model decisions.

- AI TRiSM framework adoption: Implement trust, risk, and security management controls for continuous monitoring and risk reduction.

How can AI become a strategic enabler for cybersecurity?

When paired with robust quality and risk management practices, AI can:

- Minimise security incidents

- Enhance incident response speed

- Strengthen regulatory compliance

- Provide a competitive advantage by protecting operational continuity and stakeholder trust

Why is explainability important in AI cybersecurity systems?

Explainable AI ensures that organisations can understand how decisions are made. This is critical for compliance, incident investigation, and validating threat detection accuracy. Without explainability, high-performing models may be mistrusted or underutilised.

What should organisations prioritise for AI deployment in cybersecurity?

- Building secure, adaptive IT infrastructure

- Continuous monitoring via 24/7 Security Operations Centres (SOCs)

- Employee training against social engineering

- Implementing multi-factor authentication (MFA) and patch management

- Using AI for threat detection, vulnerability management, and automated response

What is the outlook for AI in enterprise cybersecurity?

AI adoption is accelerating. Organisations that proactively integrate AI with structured quality standards, privacy measures, and risk management frameworks will reduce cybersecurity threats and leverage AI as a strategic business advantage.