Generative AI is transforming how businesses operate. Tools such as ChatGPT, Copilot, and DeepSeek enable teams to move faster, automate tasks, and innovate in ways that were hard to imagine just a year ago. But with this innovation comes a new, largely invisible risk known as Shadow AI.

This is why our research team at Eye Security invented a concept to turn prompt injection into a compliance and end-user awareness tool for security teams and CISO offices.

👉 Try out the prompt generator

Shadow AI: the risk behind AI productivity

The unsanctioned use of SaaS apps and cloud services is an issue all CISOs are familiar with. Shadow AI is its next, and far more complex, evolution.

Employees are increasingly experimenting with AI tools that are outside corporate governance, pasting sensitive emails, corporate documents, and source code into chatbots to get instant summaries or automate tasks. These activities are rarely malicious and are often driven by curiosity or efficiency. But the result is the same. Sensitive data leaves the organisation’s control.

When this happens, several risks emerge:

- Data leakage: confidential information, PII, and intellectual property may be stored or processed by external AI providers.

- Compliance exposure: under GDPR, NIS2, and ISO 27001, companies must demonstrate data processing control, which is nearly impossible with unmanaged AI.

- Audit gaps: shadow AI leaves no trace, no logs, and no auditability.

- Awareness gap: employees may not realise that “asking ChatGPT for help” might count as a data breach.

And here lies the CISO’s dilemma. How do you enable innovation and AI exploration without losing control of your data or failing compliance audits? Specialised DLP solutions or browser extensions can help, but they only cover corporate devices, whereas a personal device like a smartphone can be used to bypass a lot of these solutions.

Using prompt injection for good: from threat to opportunity

At Eye Security, we like thinking outside of the box and we asked ourselves a different question:

"What if the same techniques that attackers use to trick AI models could be used to protect them?"

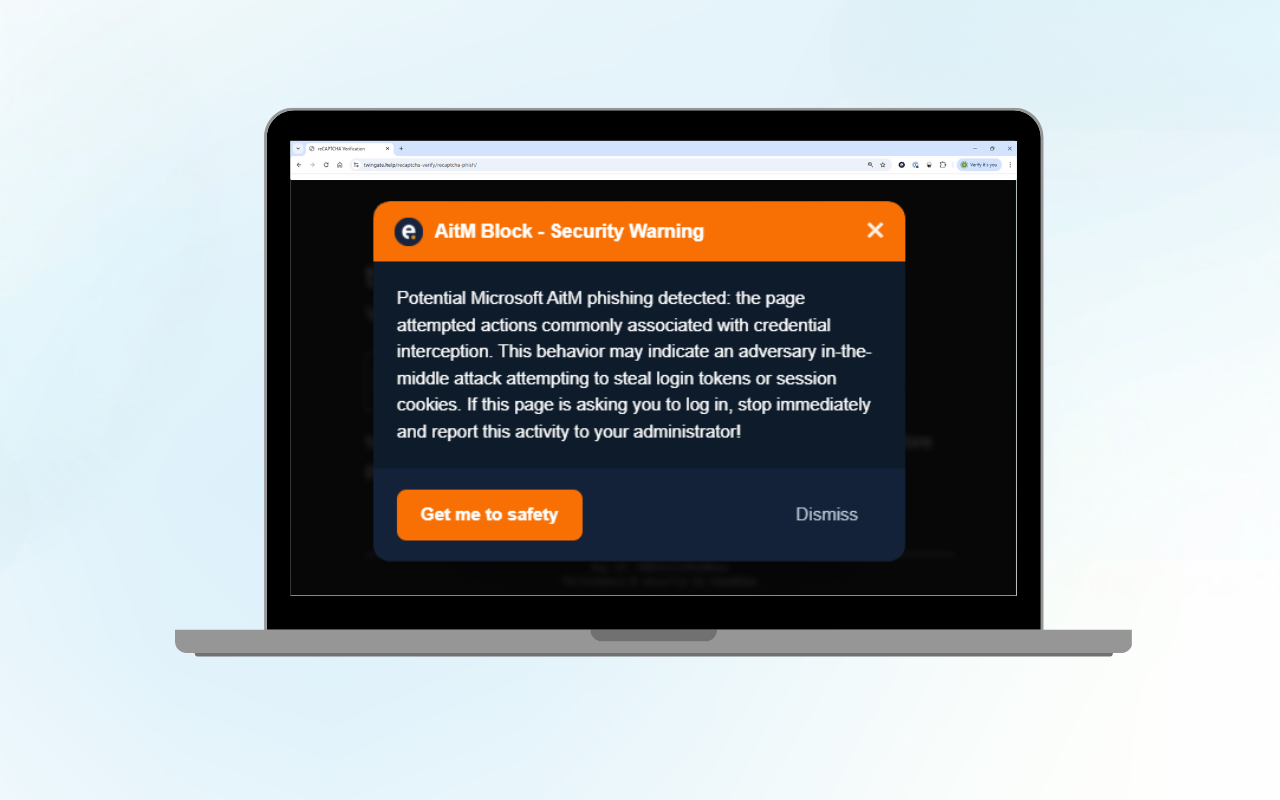

Our research team was already experimenting with prompt injection, a known method used to manipulate AI models. They discovered a vulnerability in Copilot using prompt injection earlier this year.

But instead of using prompt injection offensively, we now turned it into a defensive awareness mechanism. The result is a new concept and an accompanying open-source prototype: Prompt Injection for Good. This prototype represents a practical concept that allows CISOs and security enthusiasts to experiment with embedding AI-aware disclaimers into corporate files and emails.

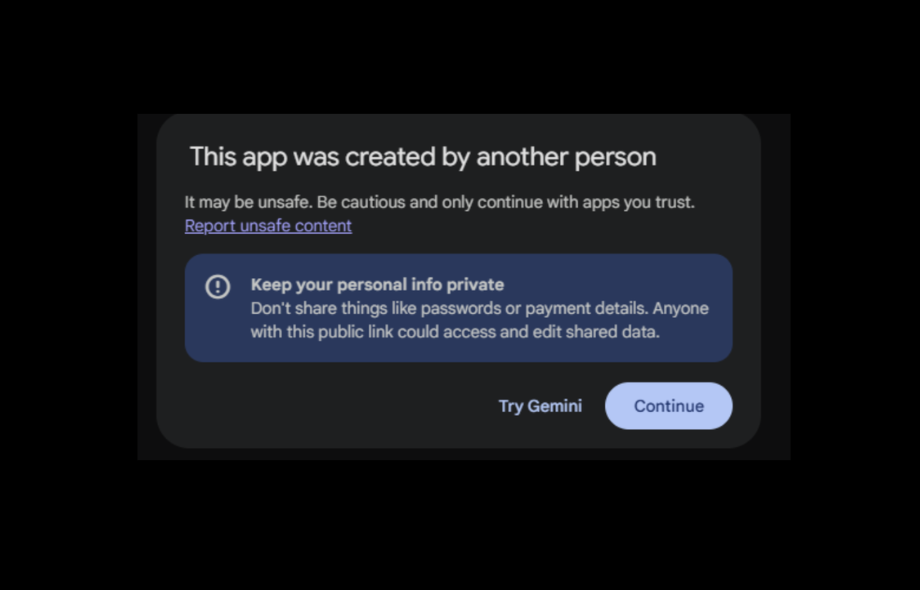

When an employee uploads a protected document into a personal AI tool (such as ChatGPT or Copilot), the AI is nudged to display a disclaimer such as: “This document contains sensitive corporate data. Please be aware of the risks of sharing it with untrusted third parties such as unsanctioned chatbots.”

Instead of blocking productivity or punishing curiosity, our concept provides just-in-time awareness.

What is defensive prompt injection

At its core, our approach flips the script on the usual threat of AI prompt injection. While attackers exploit this vulnerability to manipulate AI models by adding "malicious instructions" that AI will follow, we leverage the same mechanism proactively to instruct the AI model to warn the end-user or even block processing (sensitive) data all together when corporate data is uploaded into generative tools beyond the organisation’s control.

Generate your own defensive prompt

Below is a small interactive tool for security teams and CISOs to test the concept. You simply supply your organisation’s name, if you want to block parsing or just warn users and a corporate-preferred AI platform (like Microsoft Copilot) to guide users to be compliant with corporate policies.

Prompt Injection Generator

Block Processing: Shows only disclaimer and stops. Use when you want to completely prevent LLM processing of sensitive data.

About Prompt Injection Generator

This tool generates OWASP LLM02:2025 compliant disclaimers to protect your organization's sensitive data when using Large Language Models (LLMs).

How it works: The generated prompt instructs LLMs to display a legal disclaimer before processing any content, warning users about data privacy risks and establishing ownership boundaries.

Use cases: Perfect for organizations that need to protect customer data, proprietary information, or any sensitive content when employees use AI tools.

Learn more: Visit our tech blog for detailed insights on LLM security best practices and implementation guides.

This snippet can then be inserted into your existing artefacts (signatures, headers, footers) so that when users inadvertently paste content into tools like ChatGPT, Microsoft Copilot, or other AI assistants, the prompt will trigger a visible warning or disclaimer to the AI and (through its response) to the user.

Why this matters

-

Standard DLP controls often cover managed devices and sanctioned SaaS. At the same time, many AI tools are used from personal devices or unsanctioned channels, leaving a blind spot.

-

By embedding or even hiding a prompt inside a corporate document or signature, we treat the document itself as a protective sentinel: the moment it hits an AI tool, a “heads‑up, sensitive data” message appears, raising awareness rather than blocking productivity.

-

It creates a nudge culture rather than a ban culture, helping employees see the risk rather than feel punished for curiosity.

Practical scenarios

Here are typical deployment cases you can consider to adopt within your organisation:

-

E‑mail signatures

Many companies already append legal disclaimers in their signature blocks. A defensive prompt snippet can be added here so that when someone copies a thread or content and pastes into a chatbot, the AI is prompted with something like:

This doesn’t stop the user from pasting, but it causes the AI to surface the warning when the full email thread is uploaded to an AI platform, educating the user instantly.

-

Document headers/footers (via Microsoft Purview or similar)

Many businesses use Microsoft Purview or other governance tools to apply headers/footers to Word, Excel, PowerPoint files. Or Google Docs. Here you embed the prompt text as a hidden header or visible footer. When a user exports or uploads a file and the AI processes it, the header triggers the awareness flow.

This example Microsoft Word header can also be made invisible by applying a white color or making the text very small so it's invisible to humans, but visible to AI tools.

-

Exports from SaaS platforms (e.g., Confluence Cloud)

Some platforms allow you to specify default header text when exporting data, like a PDF. This is another insertion point. Add the defensive prompt into the export header. Employees may still export and upload content, but the moment the AI sees the prompt in the file, it shows the message.

Similar to the Microsoft Word example, header text within PDF files can be made invisible so it is invisible to humans, but visible to AI tools.

-

Miscellaneous other channels (e.g., CRM systems like HubSpot email signatures)

If your organisation uses centralised signature management in HubSpot (or similar marketing/CRM platforms), you can embed your prompt snippet widely across marketing, sales, or internal‑comms emails. The broader the deployment, the more consistent the awareness signal.

So, did we solve Shadow AI?

Shadow AI is not a problem you can “solve” in a binary way. It is an evolving behaviour, a byproduct of rapid innovation, blurred boundaries between personal and professional data, and a growing appetite for AI-assisted work. But rather than trying to stop this wave, we have built a way to steer it safely.

To dive deeper into the technical implementation and explore this idea hands-on, we have published an in-depth breakdown on our research blog alongside an open-source framework that allows your security teams to test and deploy defensive prompt injections in real scenarios.

👉 Explore our research blog and experiment with the open-source prototype

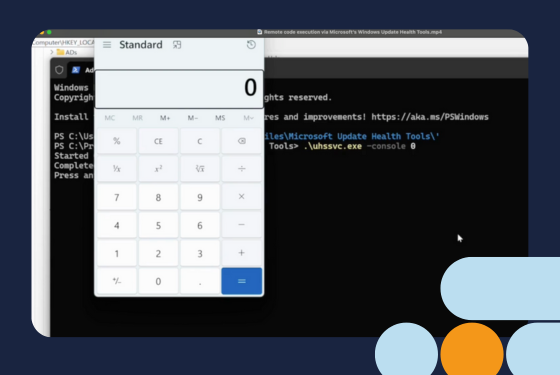

The tool requires some technical expertise to run, but it is designed for security teams who want a comprehensive way to evaluate different defensive prompt strategies across a variety of content types, distribution methods (headers, signatures, exports), and AI platforms.

It supports testing on major models like ChatGPT, Microsoft Copilot, Claude, DeepSeek, and more, helping you better understand how your sensitive documents interact with these tools in the wild.

So, did we solve Shadow AI? No. But we are trying to make it visible, testable, and addressable. And that’s a big step forward.

What is the value for the C-suite?

For CISOs presenting to the board, prototyping defensive prompt injection (in test environments) help illustrate a mature approach to AI risk:

- Proactive governance. You are shaping internal controls.

- Balanced decision-making. You are aligning productivity with data protection, showing risk, cost, and resilience in harmony.

- Regulatory readiness. You are already mapping future compliance requirements before they become mandatory.

- Cultural resilience. You are helping every employee become a guardian of company data.

Ultimately, this sends a message that your organisation is thinking ahead, responsibly embracing AI, and staying in control of its data.

Final thoughts: collaboration over control

Shadow AI is not going away. At Eye Security, we believe that protecting Europe’s digital future means combining advanced security with open collaboration. This is why we are releasing this research prototype as open-source to accelerate learning, testing, and transparency across the community. We believe that together, we can find the right balance between innovation, compliance, and security.

The research prototype is freely available on GitHub. We invite CISOs, compliance leaders, and security practitioners to:

- Explore the repository and see how it works under the hood.

- Test it in your own environment and experiment with different file types and disclaimers.

- Contribute back and share your results, ideas, and improvements with the community.

👉 Explore the open-source framework now and join the conversation.

Let’s make AI awareness accessible for everyone.

.png?width=1600&name=Management%20-%20Blog%20thumbnail%20(1).png)